From One-Shot Answers to Agentic Systems

A couple years ago, if you wanted an AI to help you, it was almost always a simple Q&A:

-

You typed a prompt into ChatGPT, got a one-off answer.

-

Maybe you used “RAG” (retrieval augmented generation) to ground that answer in company docs added to the prompt context.

But as soon as you wanted your AI to act—to actually book a meeting, run a workflow, or coordinate steps across systems—the limitations became obvious.

Why? Because Q&A models don’t do. They don’t plan. They don’t adapt mid-process. They don’t remember context well.

They just respond.

That’s why Agentic AI is suddenly everywhere—from Forbes to GitHub to Microsoft’s Build conference. The buzz isn’t just about “agents”—it’s about a new stack where AIs can think, plan, coordinate, and act in ways that feel much more autonomous and robust.

Why AI Needs to Go Beyond Just Answering

-

Simple Q&A: Good for static info or facts.

-

RAG: Better, since it grounds answers in your docs, descreasing hallucinations.

-

Agentic AI: Needed for real-world workflows that require reasoning, memory, tool use, and adapting as things change.

Example:

A RAG-powered bot can tell you your refund policy.

An Agentic system can:

- Look up your order history

- Read the refund policy

- Consider filing the refund

- Email you a summary

- Schedule a follow-up if it fails

Bottom line: For anything more than “what is…” or “summarize this,” you probably need an agentic approach.

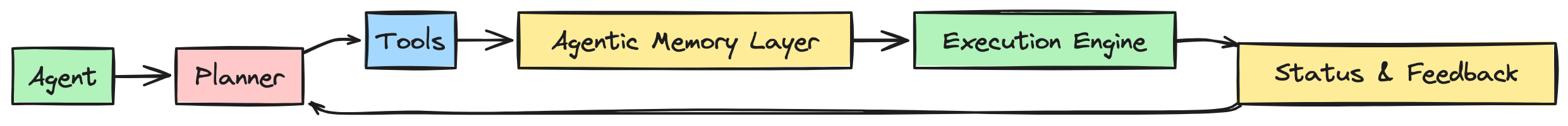

Core Architecture of Agentic AI Systems

The agentic stack is more than just “LLM + tools.” Let’s break it down:

-

The Agent receives a task or request from the user.

-

The Planner figures out what steps need to be taken to complete the task.

-

The planner chooses which Tools (like APIs or functions) to use for each step.

-

As the agent uses tools, it checks the Agentic Memory Layer to remember what has happened and keep track of relevant information.

-

The Execution Engine carries out the steps and actions required for the task.

-

The agent reviews the Status & Feedback from those actions to see if things worked as expected.

-

If needed, the agent loops back to the planner to adjust the plan based on what happened, repeating the process until the task is complete.

How Agentic AI Systems Improve Over Time

A traditional chatbot or RAG-based AI gives the same quality of answer every time: there’s no built-in way to improve based on past interactions. In contrast, agentic AI systems are fundamentally designed to adapt and get smarter the more they’re used. Let’s be blunt: most LLM-based agents today don’t “learn” the way humans do—they don’t retrain themselves on the fly. Instead, their improvement comes from three practical mechanisms:

1. Better Memory, Better Context

After each step, the agent records what happened (successes, failures, tool outputs, user corrections) into a structured memory store (often a vector database). When a similar task arises, the agent searches this memory for relevant past examples or error patterns, and uses them as context in prompts to the LLM. It’s not “learning” in the neural network sense, but smart context reuse.

Example:

If last week a tool failed when a certain API endpoint was down, and the agent sees the same error now, it can surface a workaround or warning using notes from that prior incident.

2. Prompt and Plan Adaptation

Feedback—both from the environment (tool errors, failed API calls) and users (“That’s not right!”)—is used to dynamically change prompts and plans. Here’s what that looks like in practice:

-

If a tool call fails, the planner can inject that error (“API timeout, try again in 5 min”) directly into the next LLM prompt, asking for an alternative plan or tool.

-

If a user corrects the agent (“Refunds should go to this team”), the agent can immediately update its next step or the rules it uses for routing.

-

The agent can use templates that automatically update based on recent outcomes (e.g., “If this fails three times, escalate”).

This is usually done by updating prompt templates, task flows, or “scratchpads” that the agent uses for reasoning—not by modifying the underlying LLM weights.

3. Human Feedback for Rules or Examples

In more advanced systems, when a user or admin corrects the agent, the correction is saved as a new rule or example in the agent’s memory (or a simple database). On future similar tasks, the agent retrieves this rule and bakes it into the plan or prompt.

Example:

-

“If customer is from Germany, use refund policy B, not A.”

-

The agent sees this correction, stores it, and next time auto-selects the correct policy—not because the model “learned,” but because the workflow logic adapted.

When to Use Agentic AI (vs Prompting or RAG)

If you just want smart answers, RAG is often enough.

If you want your AI to do things—coordinate steps, handle errors, adapt to context—you need Agentic AI. Here are the questions to help you with the right choice:

Q: Can this be handled in one step? ↳ Yes → Use Prompt Engineering ↳ No → Continue

Q: Is info static and self-contained? ↳ Yes → Fine-tune an LLM ↳ No → Use RAG

Q: Do you need multiple tools/actions in sequence, or to adapt mid-task? ↳ No → Tool-Augmented RAG ↳ Yes → Agentic AI

Agentic AI Isn’t Just a Trend—It’s the New Baseline

Agentic AI represents a real shift in how we build and deploy intelligent systems. Instead of just answering questions, these new AI architectures can plan, act, remember, and improve—bringing true autonomy to digital workflows. As tools get smarter and memory becomes more sophisticated, agentic approaches will move from buzzword to best practice, powering everything from customer support to research, robotics, and beyond. If you’re building anything more than Q&A, it’s time to start thinking—and building—agentically.